The core question

In the past, the degree of curriculum differentiation a teacher needed to provide for their class was practically impossible to achieve. With the growing promise of AI, can we create a tool that finally scales a teacher's ability to differentiate efficiently and effectively?

Project facts

Timeline

Kicked off in Jun 2023, piloted Feb 2024

My role

Product design, UX strategy, user research, creative technology

Team configuration

PM, UX, data science, learning science, engineering

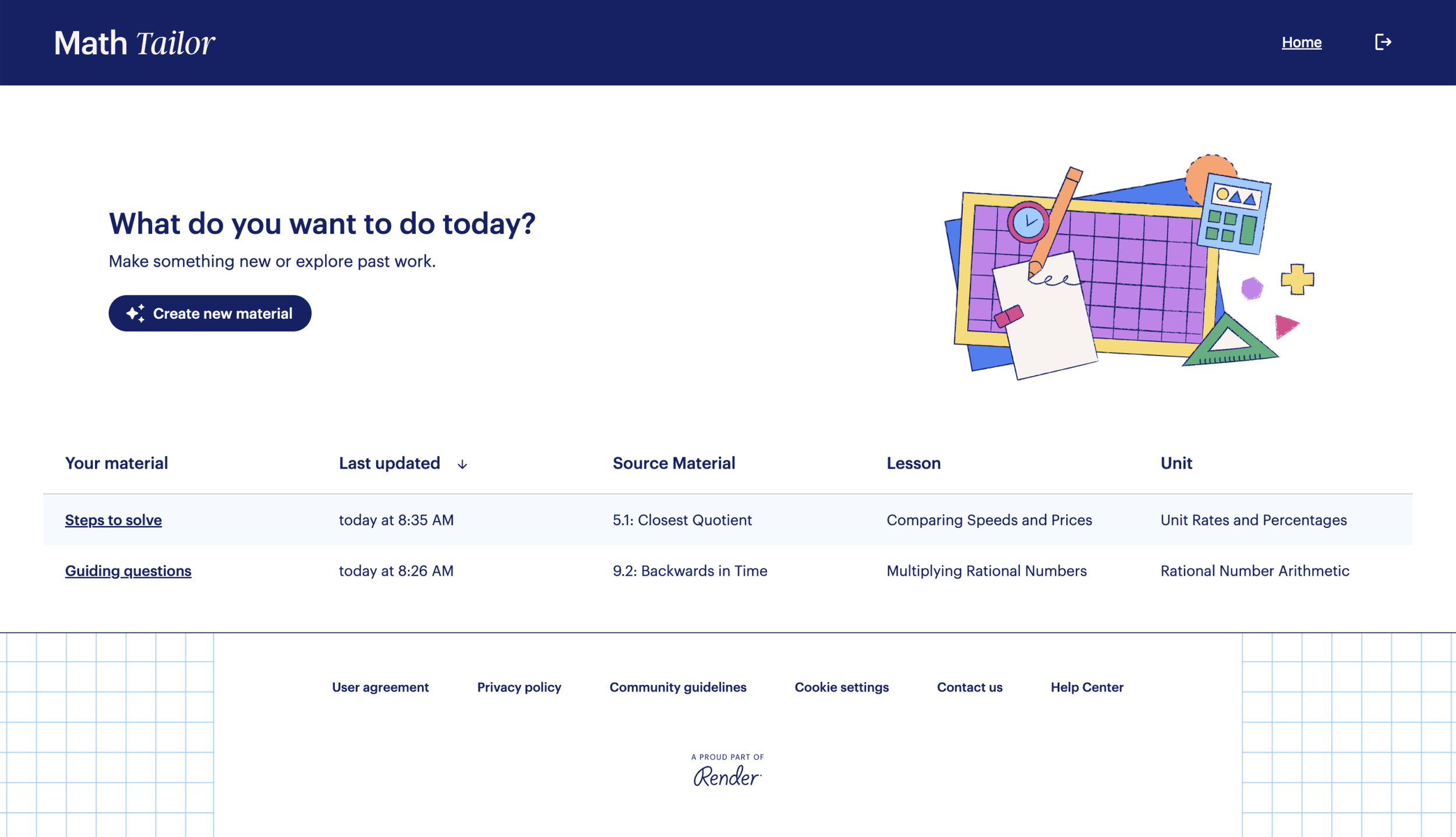

Render homepage, the co-building program where Math Tailor was piloted.

Project kick-off and framing

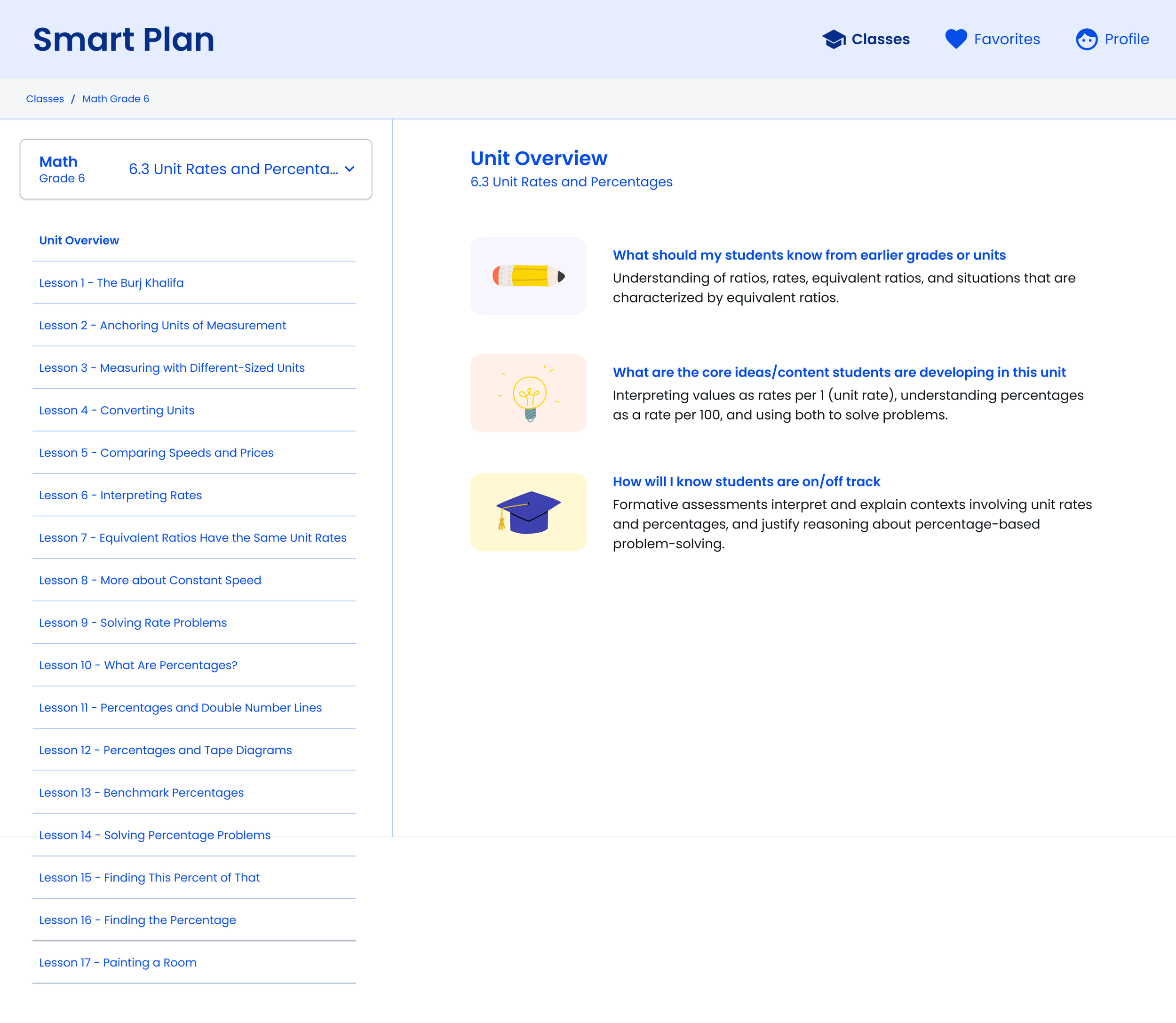

Math Tailor was born out of a critical design sprint that yielded three new product opportunities. It built on CZI's prior work in curriculum differentiation and was heavily influenced by TNTP's report The Opportunity Myth.

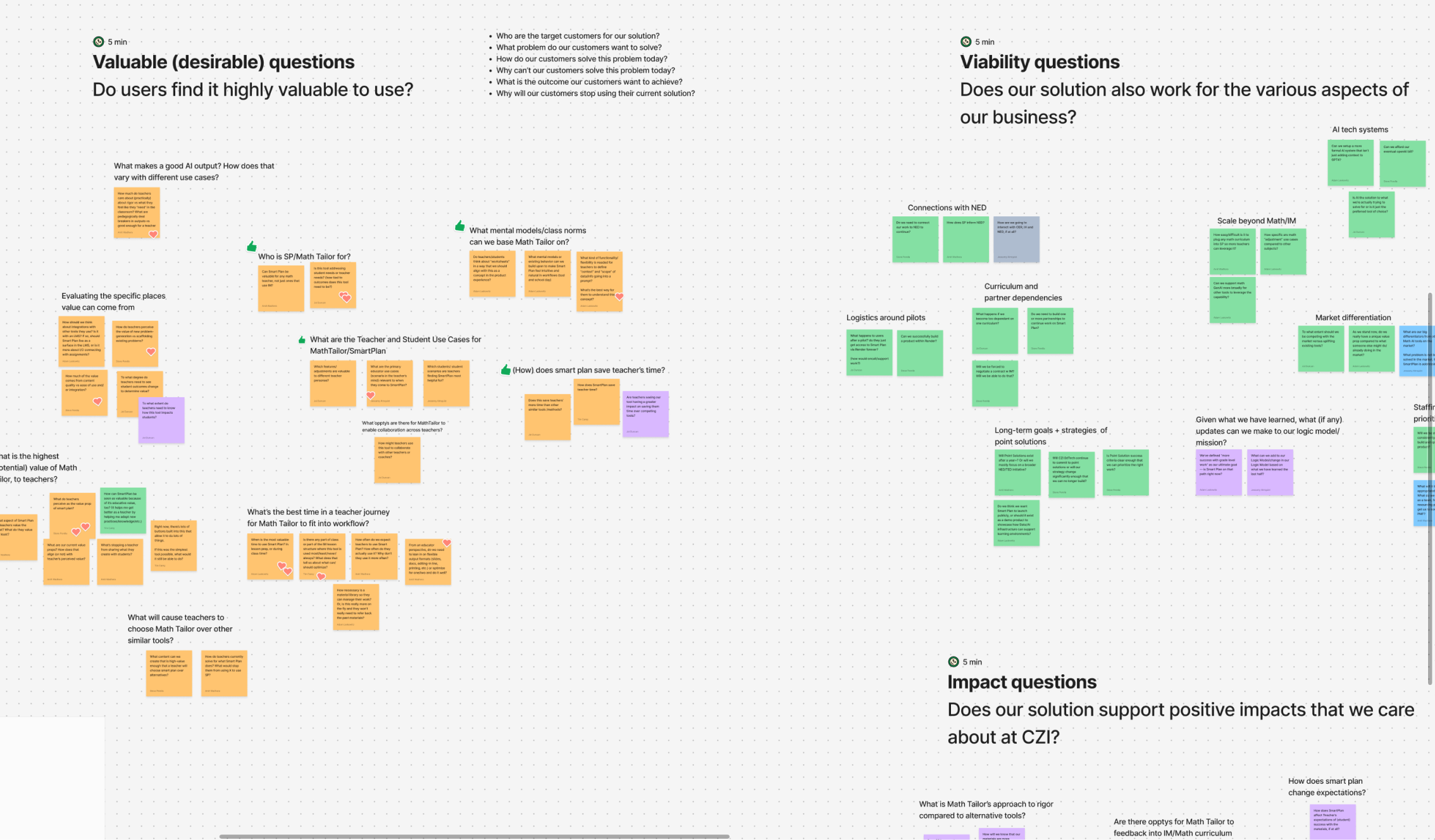

I typically start 0-to-1 initiatives with assumption mapping, which helps establish near-term learning goals and fosters team alignment.

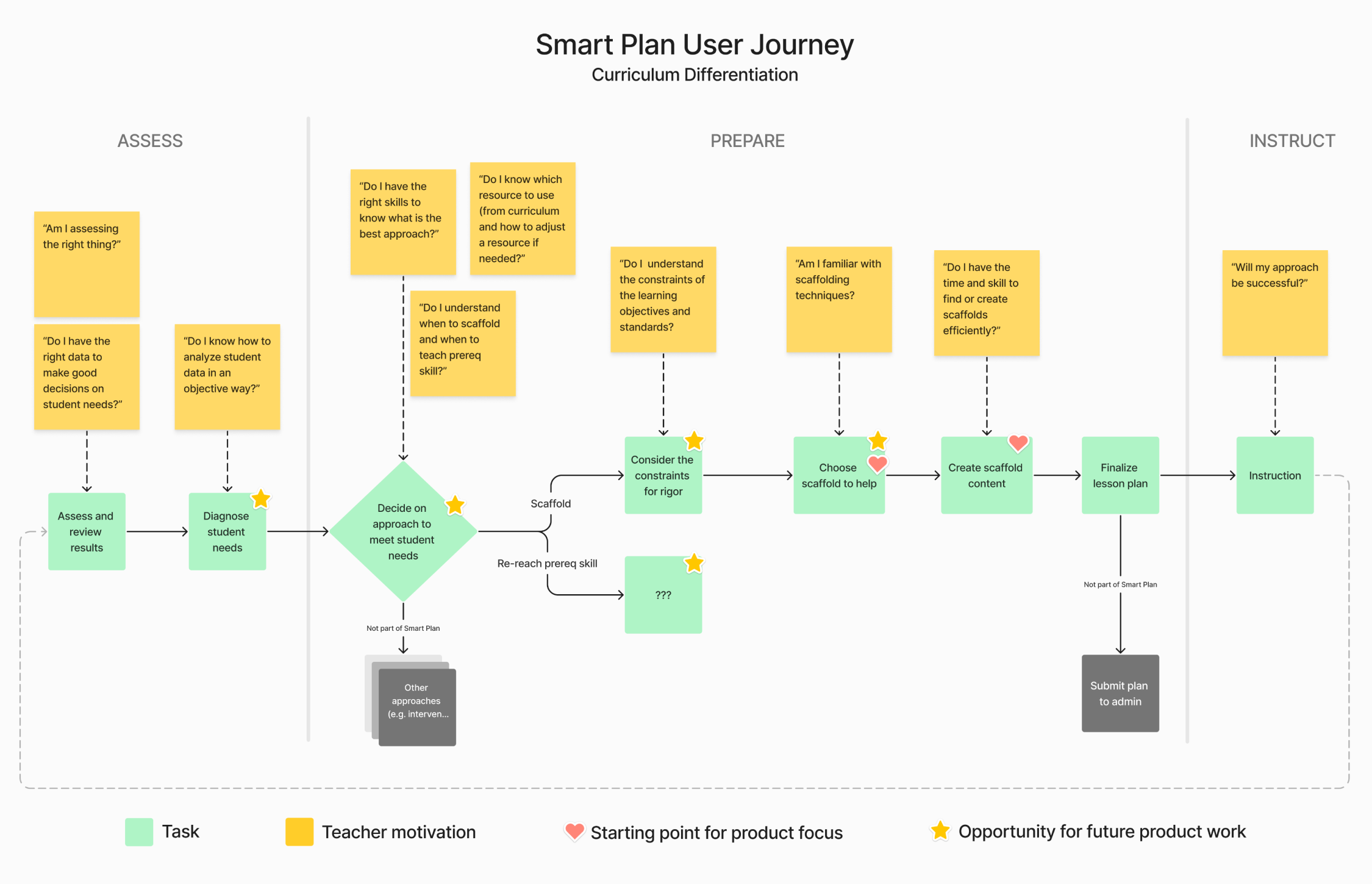

Assumption mapping helped us identify the stages of a teacher's differentiation journey (mapped in prior research) where the biggest opportunities existed to support teachers and students.

Product direction

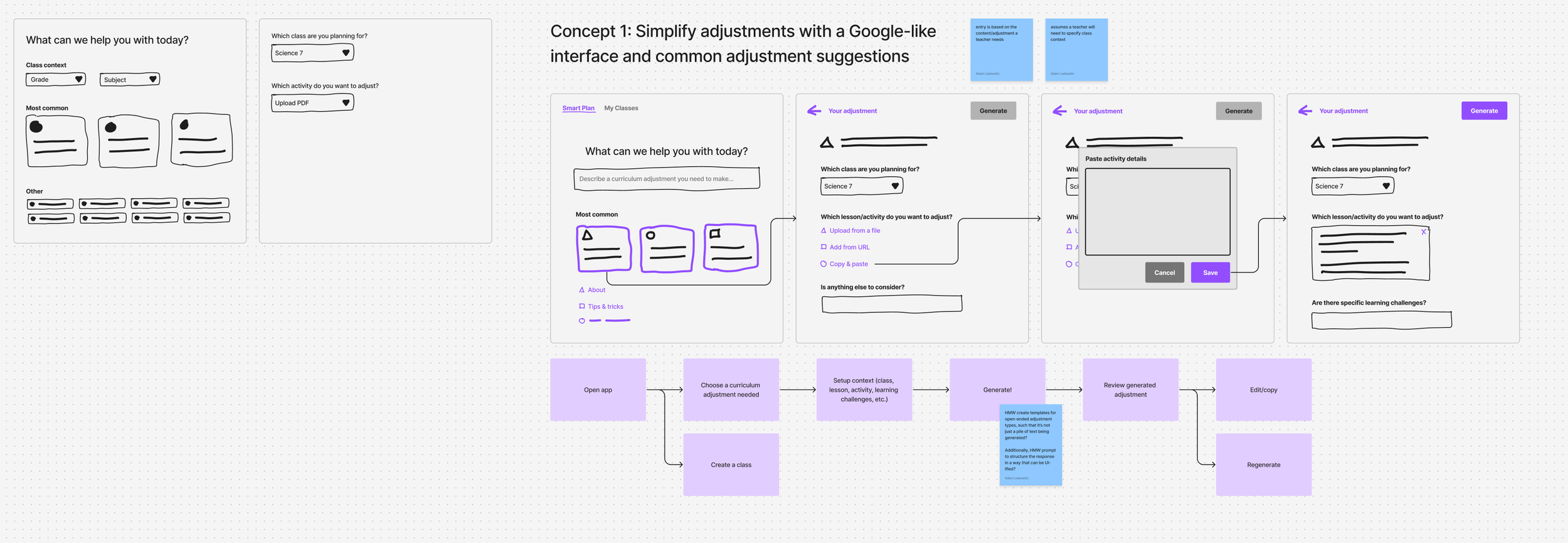

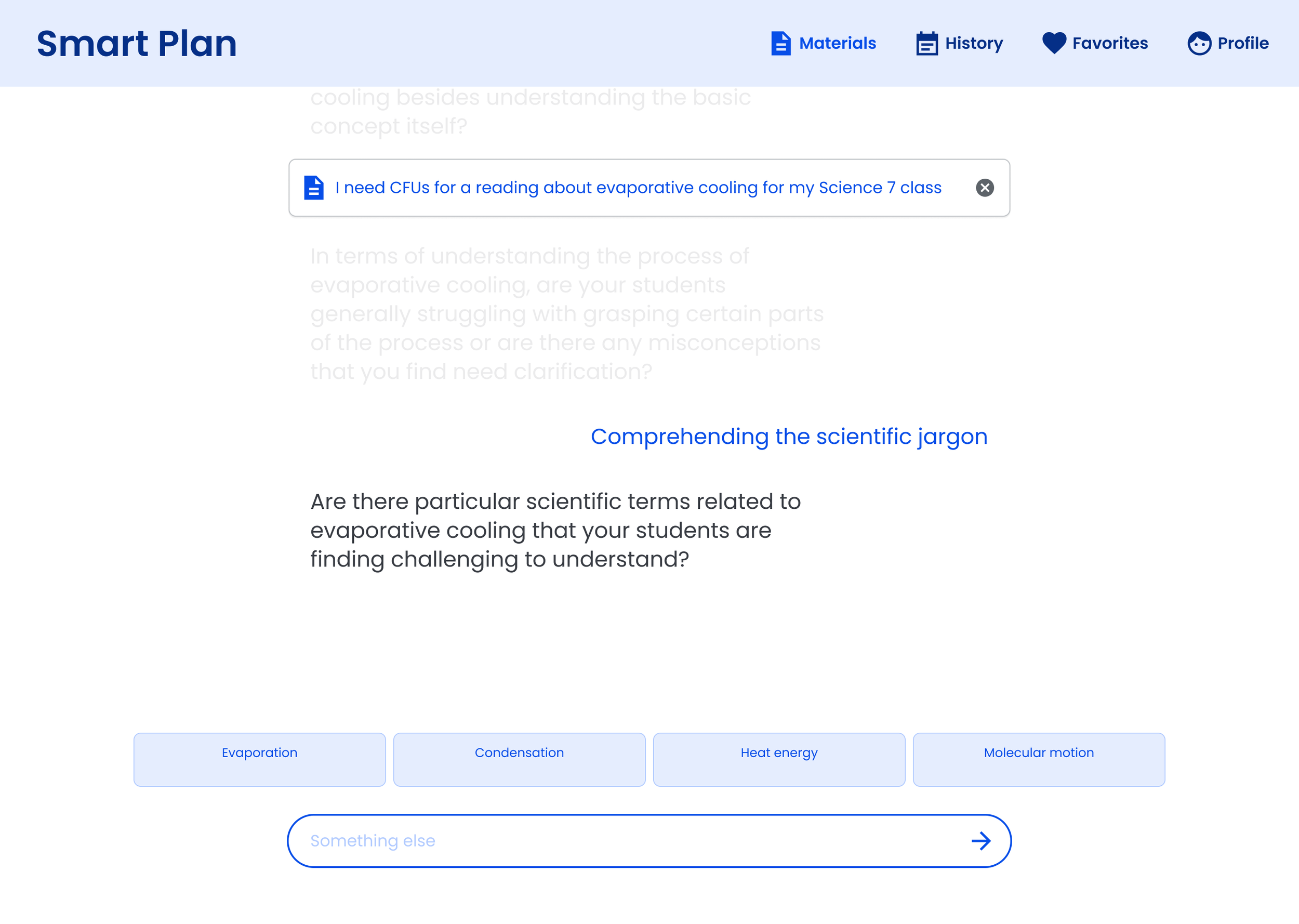

Working iteratively through user research and collaboration with engineering, I explored several concepts that helped us settle on a direction for the product strategy and teacher experience.

A major conceptual challenge

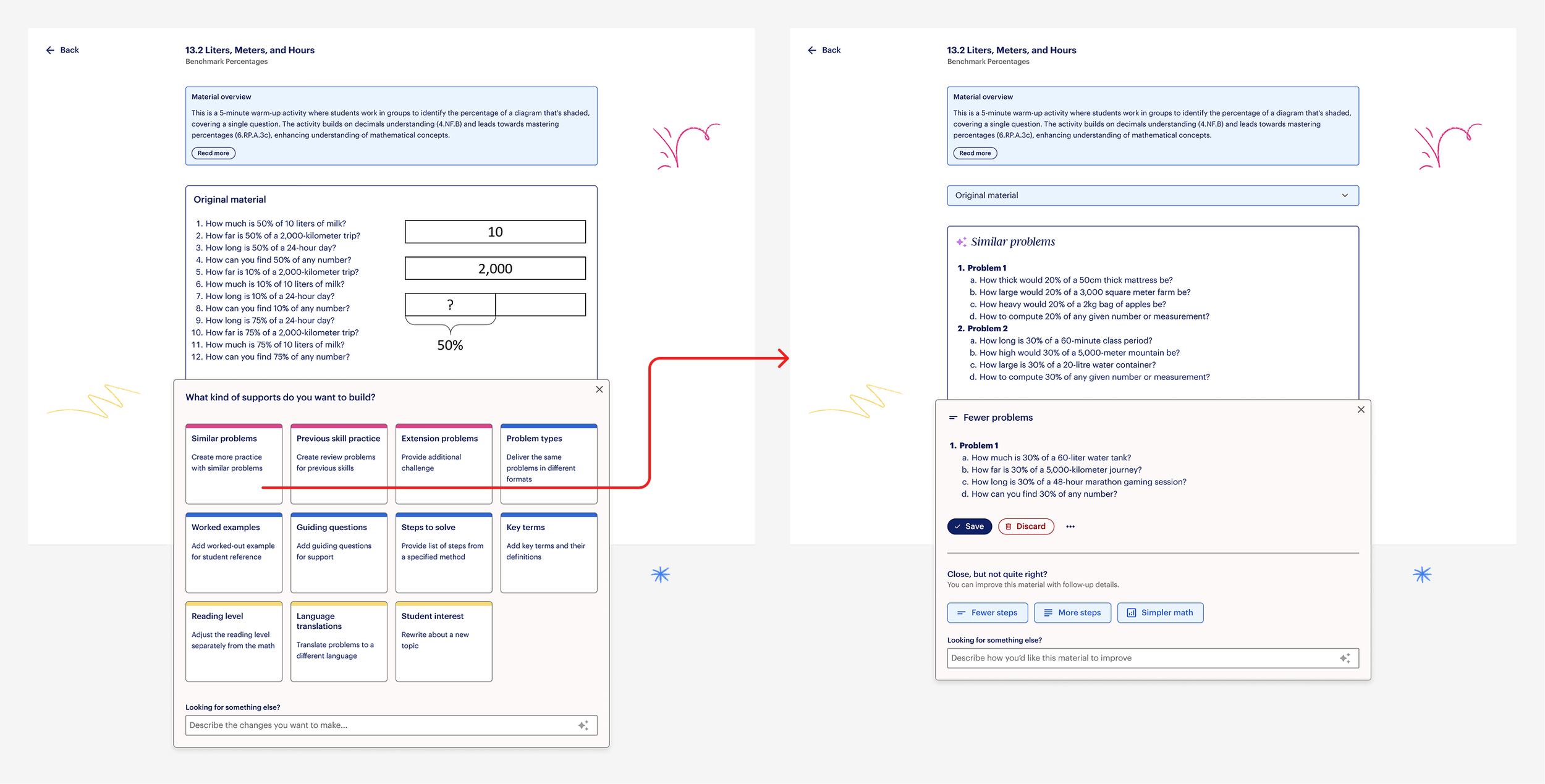

When we designed this product, LLMs were extremely nascent and industry-wide UX patterns were still undefined. As a result, the information architecture, mental models, interactions, and expectations around both data and users could easily create confusion. Compounding this, Math Tailor was not only an LLM-based product; it also fundamentally relied on users building new versions of existing content, whereas the emerging AI patterns in the industry focused on generating new content. We had to design something that did not yet exist.

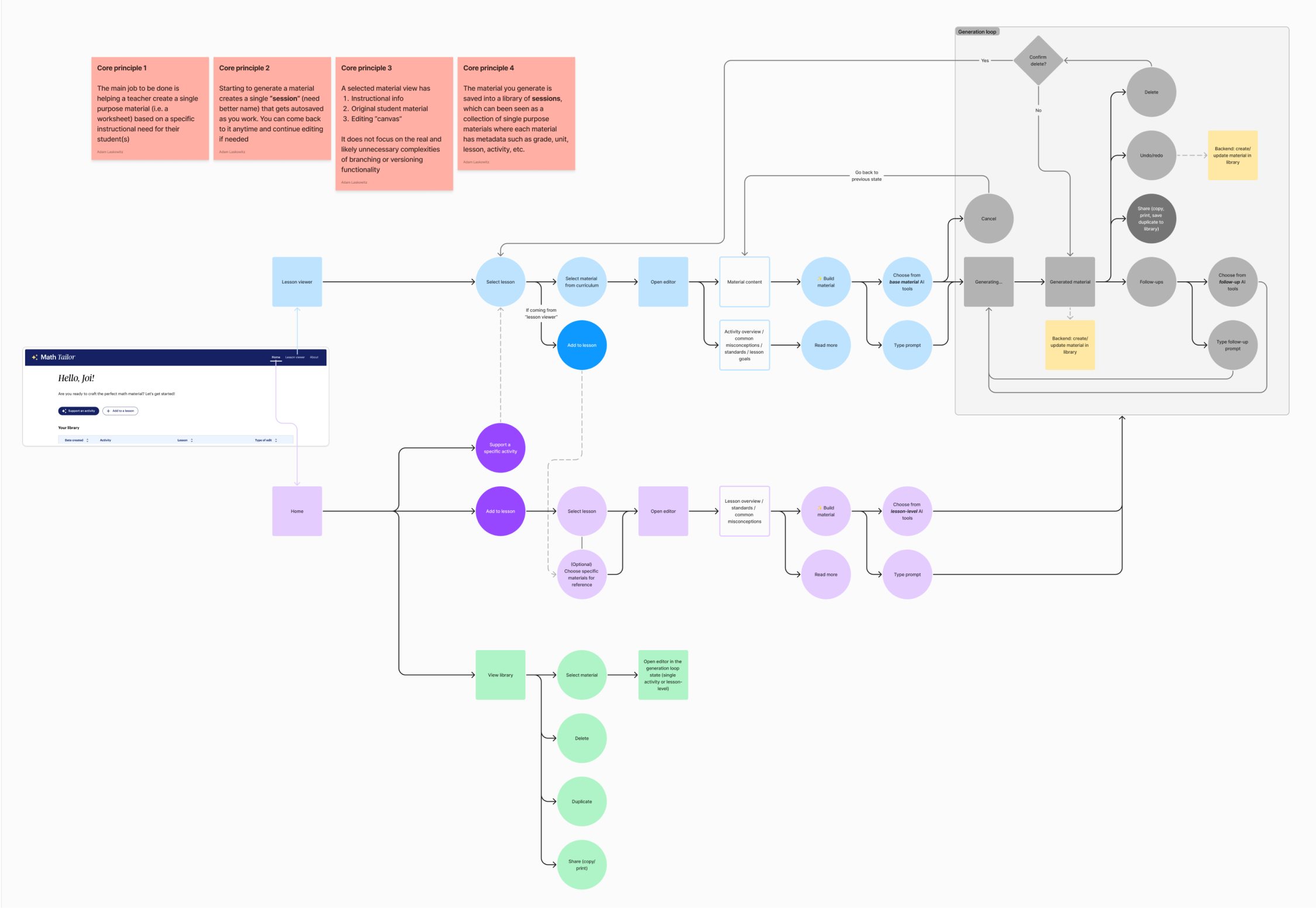

The team had many lengthy internal debates about how the backend and data in the product should be modeled and what that would mean for the user experience. I ultimately helped resolve these debates by doing two things:

1. Defining "core principles" for the product that clarified data models and concepts such as sessions, worksheets, and collections.

2. Embedding those principles in the product's user flows to communicate with the team and define the architecture-level product requirements.

Architecture and interaction design

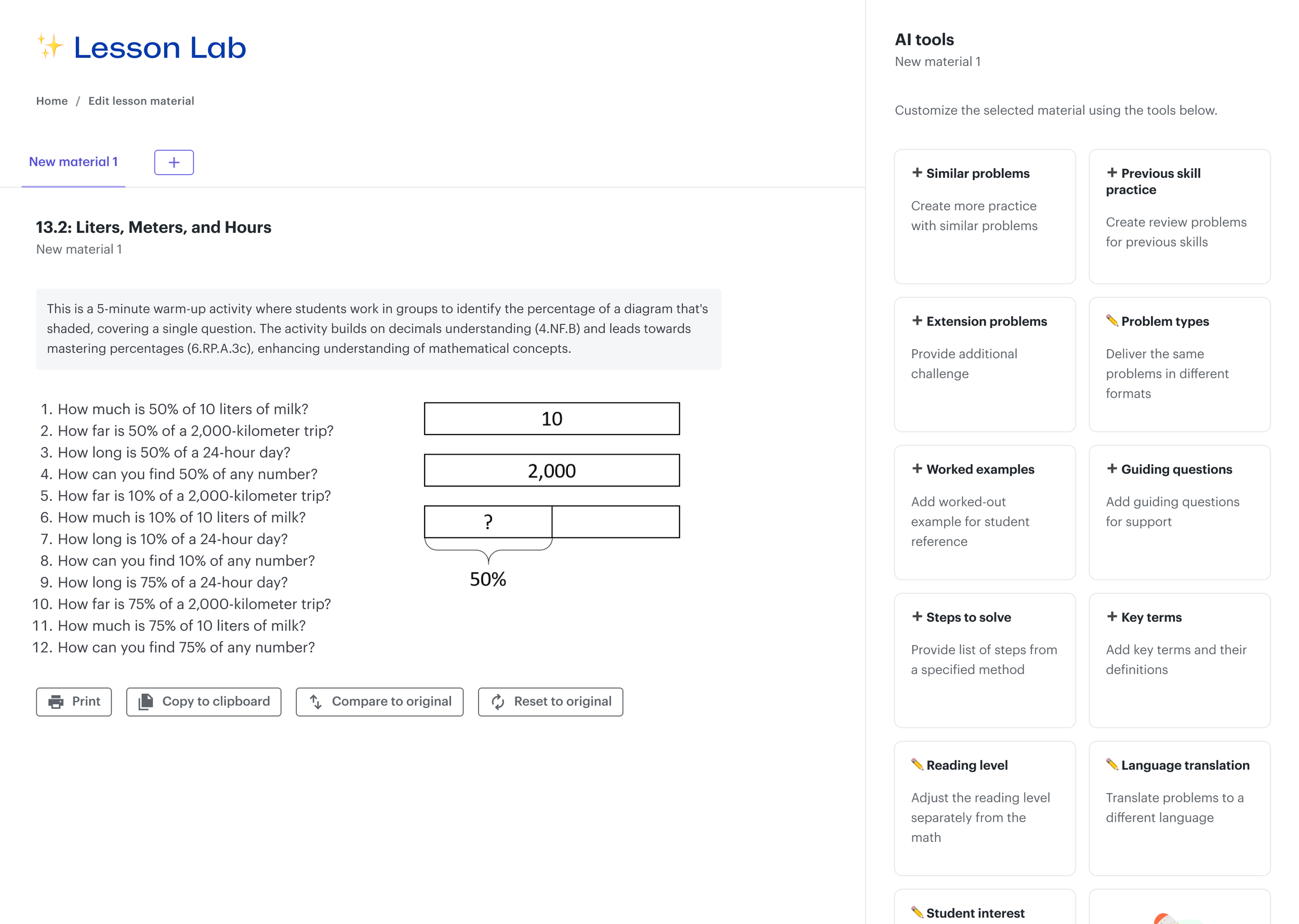

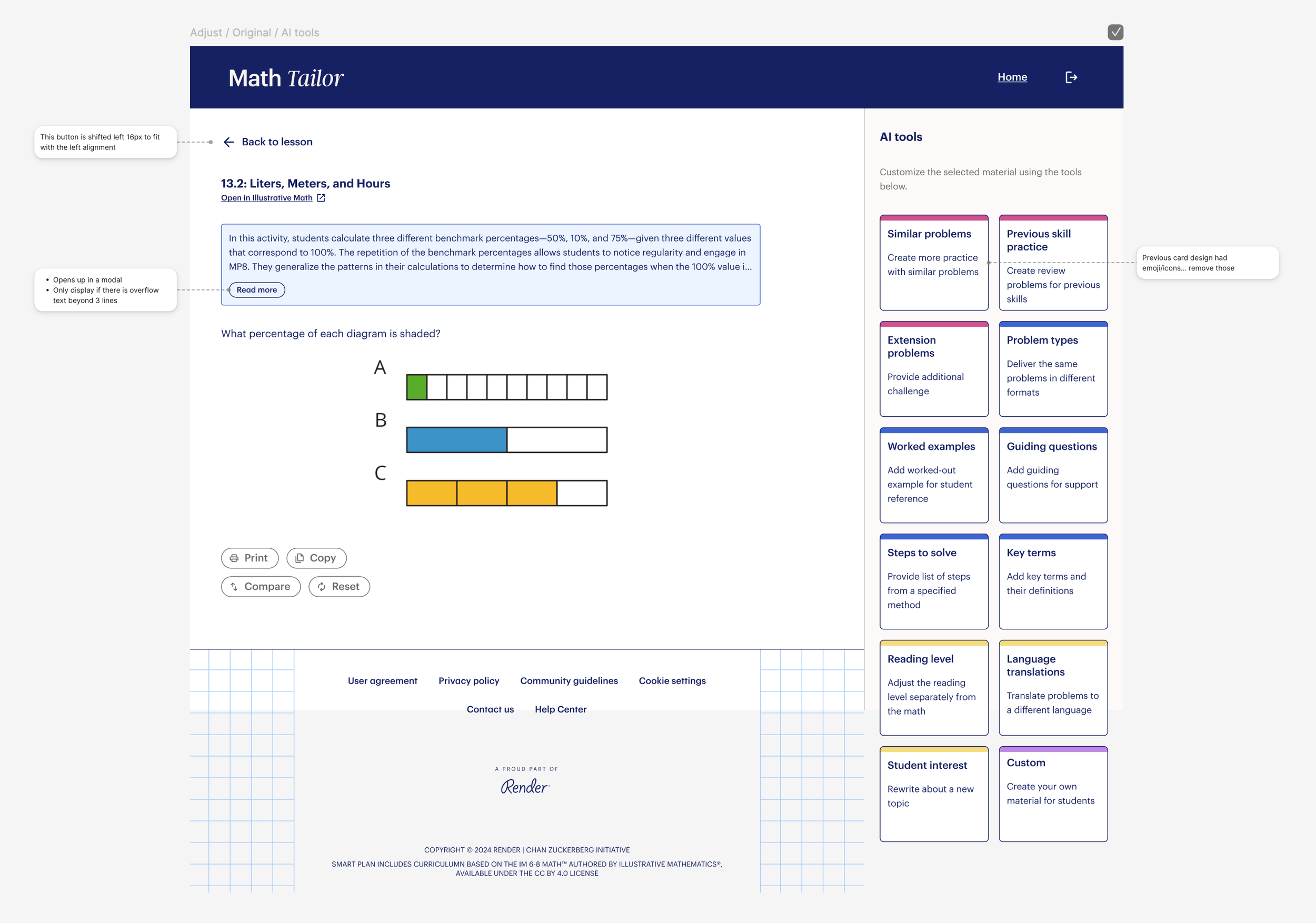

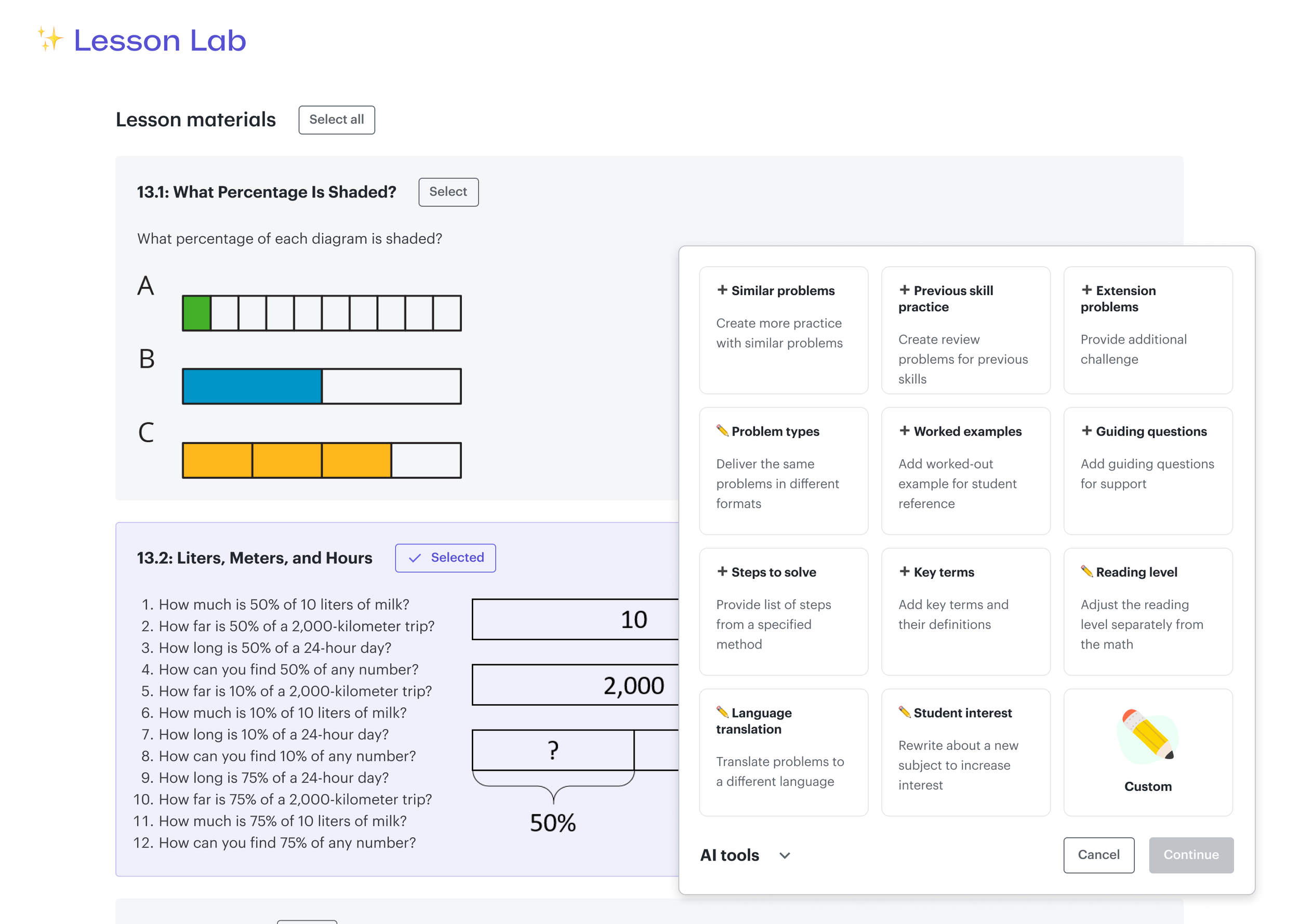

Once the core concept was aligned with user needs and the product team, I worked through the detailed features and user interactions and ultimately scoped an MVP with PM and engineering.

The fixed-panel design paradigm was too rigid for the AI workflow, so I tried something new: a design architecture that could intuitively support the kinds of LLM-based edits and versions our users needed to create with Math Tailor. I took inspiration from emerging document-editing patterns in Google Docs, Notion, and Confluence.

Learning for the whole org

Since much of my work on Math Tailor was new to the organization, I maintained an internal blog documenting my use of AI both as a design tool and as a key part of the user experience.

An example of how I documented my experiments with LLMs, exploring how to use them in product experiences.